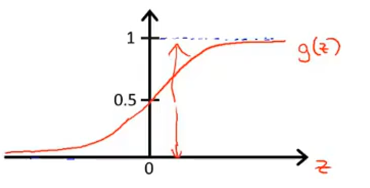

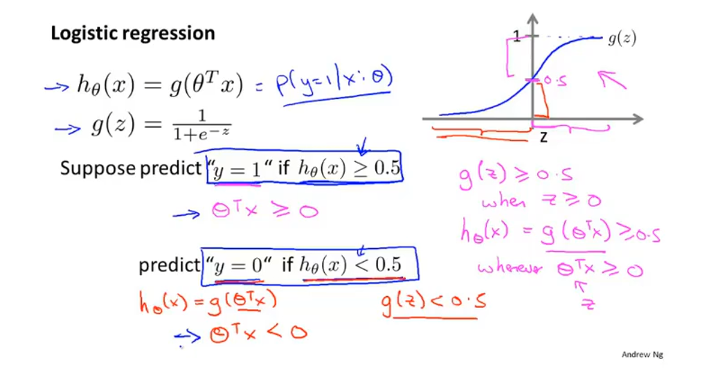

The sigmoid function is also called the logistic function.

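Classification problems: the logistic regression algorithm

The decision boundary is not a property of the training set; it is a property of the hypothesis itself and its parameters.

The hypothesis outputs the probability that an example belongs to class 1, i.e. h(x) = P(y = 1 | x; θ).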
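The two points above can be sketched in a few lines. This is a minimal illustration in plain Python (not the course's Octave code); the parameter values and the 0.5 threshold are assumptions chosen for the example.

```python
import math

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def hypothesis(theta, x):
    """h(x) = g(theta^T x): estimated probability that y = 1 for features x."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)

def predict(theta, x, threshold=0.5):
    """Predict class 1 when the estimated probability reaches the threshold."""
    return 1 if hypothesis(theta, x) >= threshold else 0

# Hypothetical parameters: the decision boundary is x1 + x2 = 3.
# It is fixed by theta alone, not by any training data.
theta = [-3.0, 1.0, 1.0]
print(hypothesis(theta, [1.0, 1.0, 2.0]))  # theta^T x = 0, so exactly 0.5
print(predict(theta, [1.0, 3.0, 3.0]))     # theta^T x = 3 > 0, so class 1
```

Each feature vector starts with the bias feature x0 = 1, so theta[0] plays the role of θ_0.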

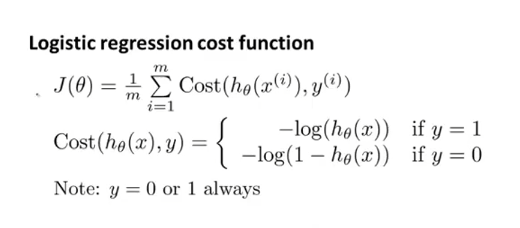

Cost function

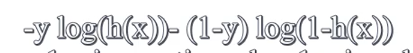

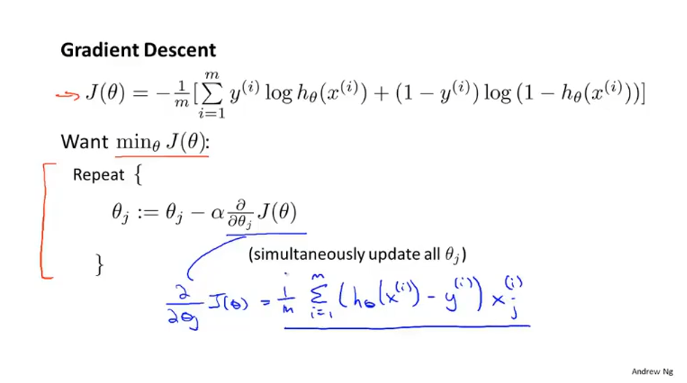

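A rewritten form suited to gradient descent folds the y = 1 and y = 0 cases into a single expression.

In summary, this cost function comes from maximum likelihood estimation in statistics, and it is convex.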
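For reference, the standard combined cost and the resulting gradient descent update can be written as follows. The notation is an assumption following the usual course conventions (m training examples, h_θ(x) = g(θᵀx), learning rate α); it is a sketch of the standard formulas, not a transcription of the figures.

$$
J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[\,y^{(i)}\log h_\theta(x^{(i)}) + \left(1-y^{(i)}\right)\log\left(1-h_\theta(x^{(i)})\right)\right]
$$

$$
\theta_j := \theta_j - \alpha\,\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}
$$

When y = 1 only the first log term survives, and when y = 0 only the second, which is how the single expression covers both cases.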

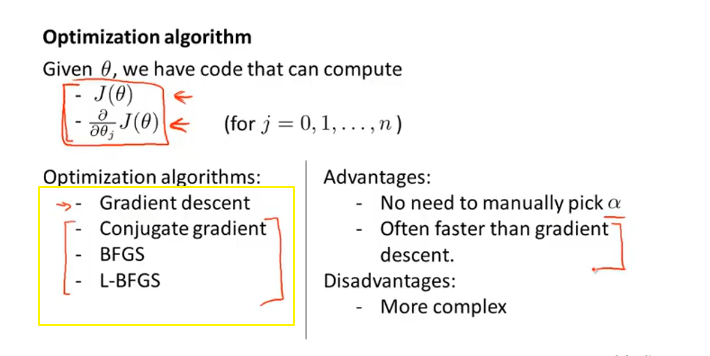

Advanced optimization

Use a library and call its optimizers directly; this suits large-scale machine learning problems.

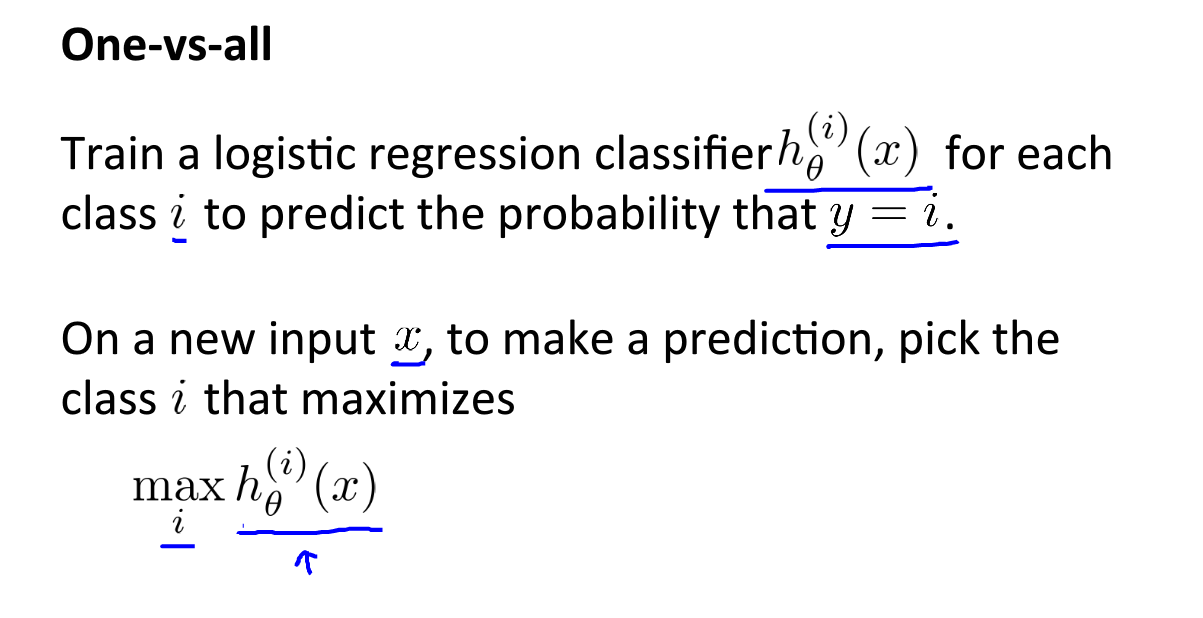

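Multiclass classification: "one-vs-all"

Train several classifiers, one per class, each treating its own class as positive and all the others as negative.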
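The prediction step of one-vs-all might look like the sketch below: run every classifier and pick the class with the highest estimated probability. The class labels and parameter values are hypothetical and stand in for classifiers that have already been trained.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_one_vs_all(all_theta, x):
    """Return the class whose classifier reports the highest probability."""
    probs = {label: sigmoid(sum(t * xi for t, xi in zip(theta, x)))
             for label, theta in all_theta.items()}
    return max(probs, key=probs.get)

# Hypothetical, already-trained parameters, one vector per class.
all_theta = {
    "triangle": [1.0, -2.0, 0.5],
    "square":   [-1.0, 1.5, -0.5],
    "cross":    [0.0, 0.2, 1.0],
}
# Scores theta^T x are -3 (triangle), 2 (square), 0.4 (cross).
print(predict_one_vs_all(all_theta, [1.0, 2.0, 0.0]))  # square
```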

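Fitting

Generalization: how well a hypothesis applies to new examples.

Underfitting (high bias) / overfitting (high variance)

Reduce the number of features

This also discards some useful information, however.

Regularization

Simplifies the hypothesis, making it less prone to overfitting.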

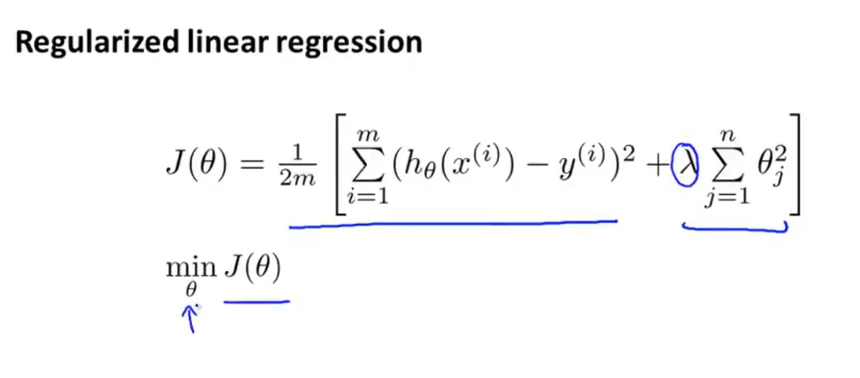

Regularized linear regression

The last term is the regularization term.

Note: θ_0 is not penalized.

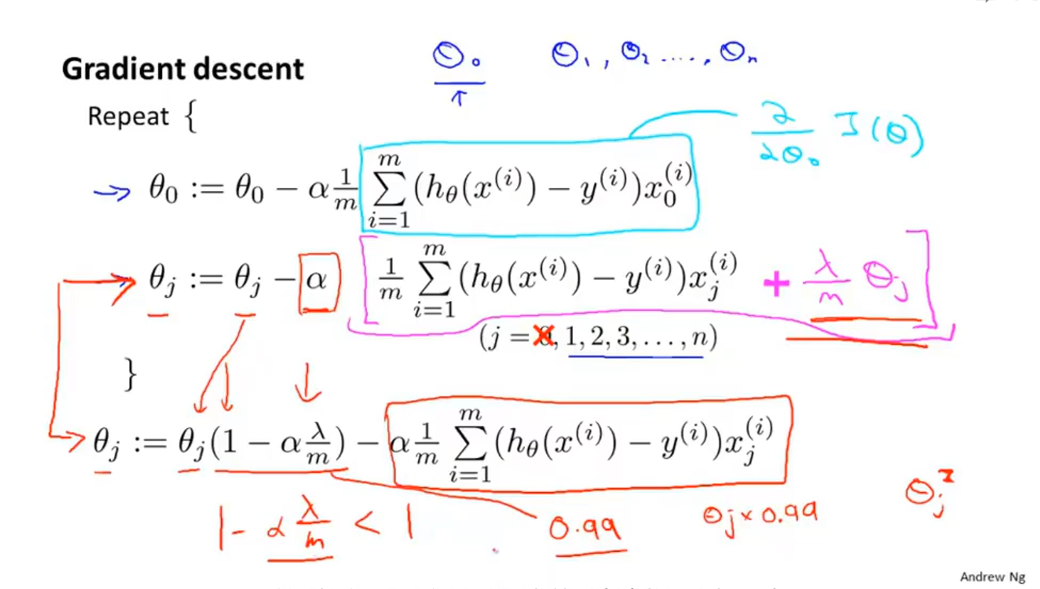
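Written out in the usual course notation (an assumption: m examples, n features, regularization parameter λ), the regularized linear regression cost is commonly given as:

$$
J(\theta) = \frac{1}{2m}\left[\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 + \lambda\sum_{j=1}^{n}\theta_j^2\right]
$$

The second sum starts at j = 1, which is what "θ_0 is not penalized" means in practice.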

(1) Gradient descent:

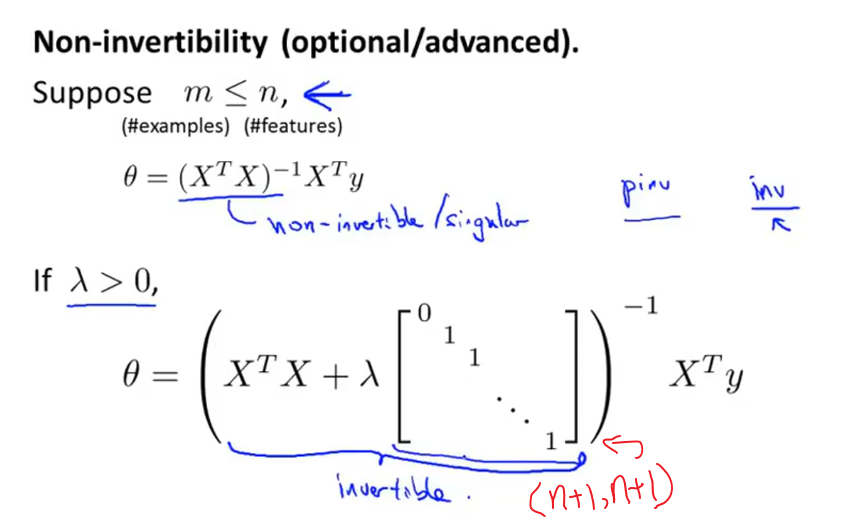
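One regularized update could be sketched as below, in plain Python rather than Octave. The function name, the toy data, and the values of alpha and lam are assumptions for illustration. For j ≥ 1 the update is equivalent to first shrinking θ_j by the factor (1 − αλ/m) and then taking the usual gradient step.

```python
def gradient_step(theta, X, y, alpha, lam):
    """One regularized gradient descent update for linear regression.

    X is a list of rows, each starting with the bias feature x0 = 1.
    """
    m = len(y)
    errors = [sum(t * xi for t, xi in zip(theta, row)) - yi
              for row, yi in zip(X, y)]
    new_theta = []
    for j, t in enumerate(theta):
        grad = sum(e * row[j] for e, row in zip(errors, X)) / m
        if j > 0:                      # theta_0 is not penalized
            grad += (lam / m) * t
        new_theta.append(t - alpha * grad)
    return new_theta

# Hypothetical toy data generated by y = 2 * x1.
X = [[1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [2.0, 4.0, 6.0]
theta = [0.0, 0.0]
for _ in range(2000):
    theta = gradient_step(theta, X, y, alpha=0.1, lam=0.0)
print(theta)  # close to [0, 2] when lam = 0
```

Rerunning with lam > 0 pulls theta[1] toward zero while leaving theta[0] free to compensate.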

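(2) Normal equation:

In Octave, pinv() may still return a result for a singular matrix in the ordinary normal equation, but that result does not generalize well. Once the normal equation is regularized (with λ > 0), the matrix to be inverted is guaranteed to be invertible.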

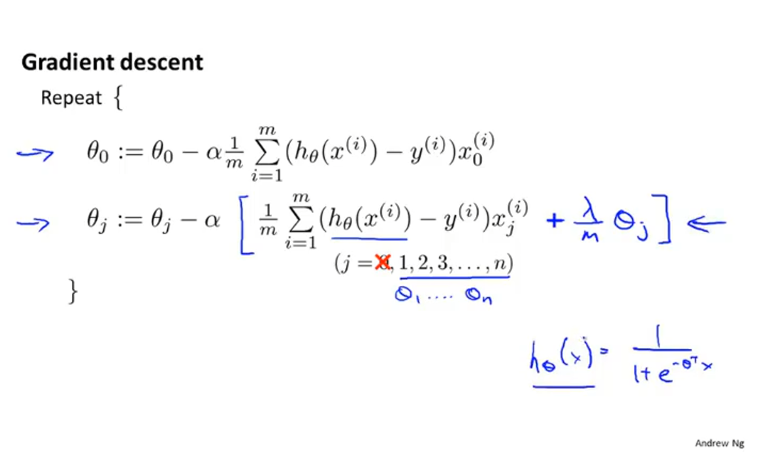
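In the usual notation (an assumption: X is the m × (n+1) design matrix with a leading column of ones), the regularized normal equation is commonly written as:

$$
\theta = \left(X^{T}X + \lambda L\right)^{-1}X^{T}y,
\qquad
L = \operatorname{diag}(0, 1, 1, \dots, 1) \in \mathbb{R}^{(n+1)\times(n+1)}
$$

The zero in the top-left entry of L keeps θ_0 out of the penalty, and for λ > 0 the matrix XᵀX + λL is invertible even when XᵀX alone is singular.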

Regularized logistic regression

Note: θ_0 is not penalized.

Note: the update looks the same as for linear regression, but h(x) is different.

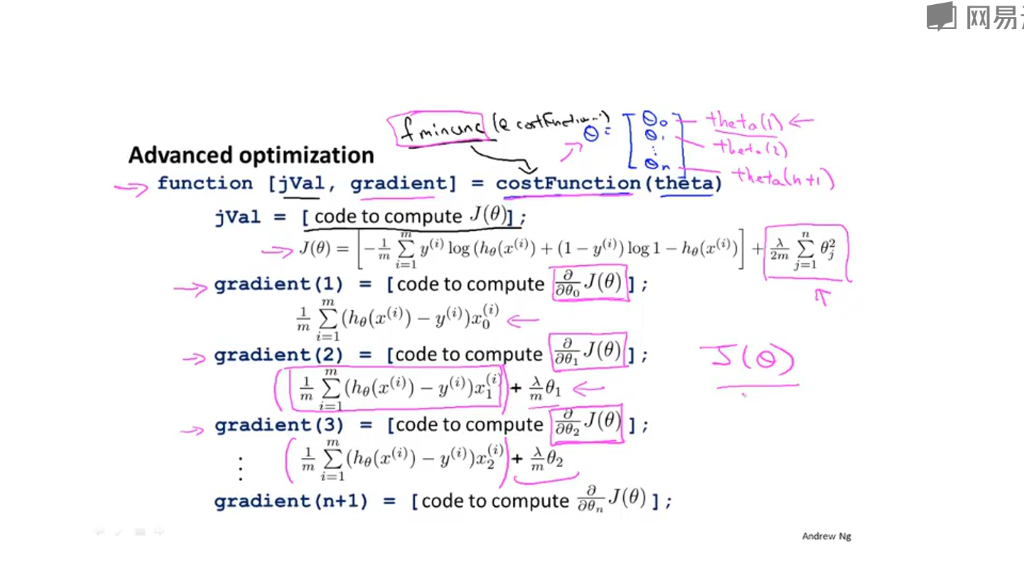
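A sketch of the regularized cost and gradient in plain Python follows. It is an illustration of the standard formulas, not the assignment solution; the function name and toy data are assumptions. The only structural difference from the linear case is that h is the sigmoid of θᵀx.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost_function_reg(theta, X, y, lam):
    """Regularized logistic regression cost J and its gradient."""
    m = len(y)
    h = [sigmoid(sum(t * xi for t, xi in zip(theta, row))) for row in X]
    J = -sum(yi * math.log(hi) + (1 - yi) * math.log(1 - hi)
             for hi, yi in zip(h, y)) / m
    J += lam / (2 * m) * sum(t * t for t in theta[1:])   # skip theta_0
    grad = []
    for j, t in enumerate(theta):
        g = sum((hi - yi) * row[j] for hi, yi, row in zip(h, y, X)) / m
        if j > 0:                                        # skip theta_0
            g += lam / m * t
        grad.append(g)
    return J, grad

# Hypothetical toy data; each row starts with the bias feature x0 = 1.
X = [[1.0, 0.5], [1.0, 2.0], [1.0, 3.5]]
y = [0, 1, 1]
J, grad = cost_function_reg([0.0, 0.0], X, y, lam=1.0)
print(J, grad)  # at theta = 0 every h is 0.5, so J = log(2)
```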

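(3) Advanced algorithms:

fminunc(): finds the minimum of a function without constraints.

There are no loops to write and no learning rate to set. You only provide a function that computes the cost and the gradient, and it returns the optimized parameters θ and the cost value.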
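The calling pattern is the key idea: the optimizer receives one function that returns both the cost and the gradient. The sketch below mimics that interface in plain Python. The `minimize` helper is a hypothetical stand-in that uses fixed-step gradient descent; it is not fminunc and does not reproduce its algorithm, and the toy data is invented for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost_function(theta, X, y):
    """Return (J, grad) for unregularized logistic regression."""
    m = len(y)
    h = [sigmoid(sum(t * xi for t, xi in zip(theta, row))) for row in X]
    J = -sum(yi * math.log(hi) + (1 - yi) * math.log(1 - hi)
             for hi, yi in zip(h, y)) / m
    grad = [sum((hi - yi) * row[j] for hi, yi, row in zip(h, y, X)) / m
            for j in range(len(theta))]
    return J, grad

def minimize(cost_and_grad, theta, alpha=0.1, iterations=500):
    """Hypothetical stand-in for a library optimizer (plain gradient descent)."""
    for _ in range(iterations):
        _, grad = cost_and_grad(theta)
        theta = [t - alpha * g for t, g in zip(theta, grad)]
    J, _ = cost_and_grad(theta)
    return theta, J

# Toy data that is not linearly separable, so the optimum is finite.
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
y = [0, 1, 0, 1]
theta, J = minimize(lambda t: cost_function(t, X, y), [0.0, 0.0])
print(theta, J)
```

The lambda that fixes X and y plays the same role as the anonymous function handle typically passed to an optimizer in Octave.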

Tip: Octave indices start at 1.

Programming assignment

costFunction.m

`function [J, grad] = costFunction(theta, X, y)`

costFunctionReg.m

`function [J, grad] = costFunctionReg(theta, X, y, lambda)`

plotData.m

`function plotData(X, y)`

predict.m

`function p = predict(theta, X)`

sigmoid.m

`function g = sigmoid(z)`